Generate APEX_DEBUG Calls to Trace Parameter Values for whole packages

APEX API Tracer enables the tracing of calls to package procedures or functions into the APEX Debug Log without manual programming.

This program can generate a package for tracing automatically when the following conditions apply:

- the package is accessible to the schema user via a synonym.

- the procedure or function is listed in the package header.

- the package header is not wrapped.

The enable - procedure will generate a package with the same name as the synonym in your local schema.

The link from your local applications to the synonym will be intercepted by the generated package.

The package will contain the same functions and procedures as the original package.

When the Option 'Logging of Procedure Start' is set to Yes, then each function and procedure consists of:

- A call to APEX_DEBUG.LOG_LONG_MESSAGE to produce log entries with text for valid PL/SQL procedure calls with enquoted parameters, that you can copy and paste into the sql console for testing. Arguments of type IN and IN/OUT are logged.

- A call to invocate the original procedure or functions.

- A call to APEX_DEBUG.LOG_LONG_MESSAGE to produce log entries for the output values and return values of the invocation. Arguments of type OUT and IN/OUT are logged.

When the Option 'Logging of Procedure Start' is set to No, then each function and procedure consists of:

- A call to invocate the original procedure or functions.

- A call to APEX_DEBUG.LOG_LONG_MESSAGE to produce log entries for all argument values and function return values of the invocation. Arguments of type IN, OUT, and IN/OUT are logged.

For other packages where the above-mentioned conditions do not apply, you can manually add generated code with invocations to the api_trace package to support logging for prepared functions or procedures in your own packages.

Usage

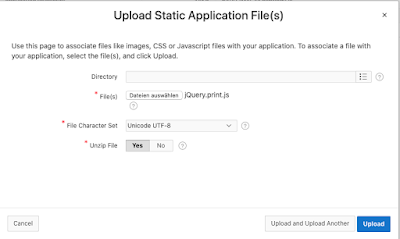

Install the APEX application with supporting objects.

Start the Application and use the Switch in the column 'Tracing is Enabled' to start and stop the tracing of a package. The app will list for some packages the grant statements that you have to execute as an admin.

Example output for the package APEX_LANG:

create or replace NONEDITIONABLE PACKAGE "APEX_LANG"

is

-- This package was generated by package_tracer from Strack Software Development, Berlin, Germany.

-- It replaces : PUBLIC SYNONYM "APEX_LANG" for "APEX_190100"."HTMLDB_LANG"

function message (

p_name in varchar2 default null,

p0 in varchar2 default null,

p1 in varchar2 default null,

p2 in varchar2 default null,

p3 in varchar2 default null,

p4 in varchar2 default null,

p5 in varchar2 default null,

p6 in varchar2 default null,

p7 in varchar2 default null,

p8 in varchar2 default null,

p9 in varchar2 default null,

p_lang in varchar2 default null,

p_application_id in number default null)

return varchar2

;

procedure message_p (

p_name in varchar2 default null,

p0 in varchar2 default null,

p1 in varchar2 default null,

p2 in varchar2 default null,

p3 in varchar2 default null,

p4 in varchar2 default null,

p5 in varchar2 default null,

p6 in varchar2 default null,

p7 in varchar2 default null,

p8 in varchar2 default null,

p9 in varchar2 default null,

p_lang in varchar2 default null,

p_application_id in number default null)

;

function lang (

p_primary_text_string in varchar2 default null,

p0 in varchar2 default null,

p1 in varchar2 default null,

p2 in varchar2 default null,

p3 in varchar2 default null,

p4 in varchar2 default null,

p5 in varchar2 default null,

p6 in varchar2 default null,

p7 in varchar2 default null,

p8 in varchar2 default null,

p9 in varchar2 default null,

p_primary_language in varchar2 default null)

return varchar2

;

procedure create_message(

p_application_id in number,

p_name in varchar2,

p_language in varchar2,

p_message_text in varchar2 )

;

procedure update_message(

p_id in number,

p_message_text in varchar2 );

procedure delete_message(

p_id in number );

procedure update_translated_string(

p_id in number,

p_language in varchar2,

p_string in varchar2);

procedure seed_translations(

p_application_id in number,

p_language in varchar2 );

procedure create_language_mapping(

p_application_id in number,

p_language in varchar2,

p_translation_application_id in number);

procedure update_language_mapping(

p_application_id in number,

p_language in varchar2,

p_new_trans_application_id in number);

procedure delete_language_mapping(

p_application_id in number,

p_language in varchar2);

procedure publish_application(

p_application_id in number,

p_language in varchar2,

p_new_trans_application_id in number default null );

procedure emit_language_selector_list;

end APEX_LANG;

/

create or replace NONEDITIONABLE PACKAGE BODY "APEX_LANG"

IS

function message (

p_name in varchar2 default null,

p0 in varchar2 default null,

p1 in varchar2 default null,

p2 in varchar2 default null,

p3 in varchar2 default null,

p4 in varchar2 default null,

p5 in varchar2 default null,

p6 in varchar2 default null,

p7 in varchar2 default null,

p8 in varchar2 default null,

p9 in varchar2 default null,

p_lang in varchar2 default null,

p_application_id in number default null)

return varchar2

is

lv_result VARCHAR2(32767);

begin

lv_result := apex_190100.htmldb_lang.message(p_name,p0,p1,p2,p3,p4,p5,p6,p7,p8,p9,p_lang,p_application_id);

apex_debug.log_long_message(p_message=>'API: ' || 'apex_lang.message('

|| 'p_Name=>' || api_trace.Literal(p_Name)

|| ', p0=>' || api_trace.Literal(p0)

|| ', p1=>' || api_trace.Literal(p1)

|| ', p2=>' || api_trace.Literal(p2)

|| ', p3=>' || api_trace.Literal(p3)

|| ', p4=>' || api_trace.Literal(p4)

|| ', p5=>' || api_trace.Literal(p5) || chr(10)

|| ', p6=>' || api_trace.Literal(p6)

|| ', p7=>' || api_trace.Literal(p7)

|| ', p8=>' || api_trace.Literal(p8)

|| ', p9=>' || api_trace.Literal(p9)

|| ', p_Lang=>' || api_trace.Literal(p_Lang)

|| ', p_Application_Id=>' || api_trace.Literal(p_Application_Id) || ')' || ' returns '

|| api_trace.Literal(lv_result), p_level=>5);

return lv_result;

end message;

procedure message_p (

p_name in varchar2 default null,

p0 in varchar2 default null,

p1 in varchar2 default null,

p2 in varchar2 default null,

p3 in varchar2 default null,

p4 in varchar2 default null,

p5 in varchar2 default null,

p6 in varchar2 default null,

p7 in varchar2 default null,

p8 in varchar2 default null,

p9 in varchar2 default null,

p_lang in varchar2 default null,

p_application_id in number default null)

is

begin

apex_190100.htmldb_lang.message_p(p_name,p0,p1,p2,p3,p4,p5,p6,p7,p8,p9,p_lang,p_application_id);

apex_debug.log_long_message(p_message=>'API: ' || 'apex_lang.message_p('

|| 'p_Name=>' || api_trace.Literal(p_Name)

|| ', p0=>' || api_trace.Literal(p0)

|| ', p1=>' || api_trace.Literal(p1)

|| ', p2=>' || api_trace.Literal(p2)

|| ', p3=>' || api_trace.Literal(p3)

|| ', p4=>' || api_trace.Literal(p4)

|| ', p5=>' || api_trace.Literal(p5) || chr(10)

|| ', p6=>' || api_trace.Literal(p6)

|| ', p7=>' || api_trace.Literal(p7)

|| ', p8=>' || api_trace.Literal(p8)

|| ', p9=>' || api_trace.Literal(p9)

|| ', p_Lang=>' || api_trace.Literal(p_Lang)

|| ', p_Application_Id=>' || api_trace.Literal(p_Application_Id) || ')', p_level=>5);

end message_p;

function lang (

p_primary_text_string in varchar2 default null,

p0 in varchar2 default null,

p1 in varchar2 default null,

p2 in varchar2 default null,

p3 in varchar2 default null,

p4 in varchar2 default null,

p5 in varchar2 default null,

p6 in varchar2 default null,

p7 in varchar2 default null,

p8 in varchar2 default null,

p9 in varchar2 default null,

p_primary_language in varchar2 default null)

return varchar2

is

lv_result VARCHAR2(32767);

begin

lv_result := apex_190100.htmldb_lang.lang(p_primary_text_string,p0,p1,p2,p3,p4,p5,p6,p7,p8,p9,p_primary_language);

apex_debug.log_long_message(p_message=>'API: ' || 'apex_lang.lang('

|| 'p_Primary_Text_String=>' || api_trace.Literal(p_Primary_Text_String)

|| ', p0=>' || api_trace.Literal(p0)

|| ', p1=>' || api_trace.Literal(p1)

|| ', p2=>' || api_trace.Literal(p2)

|| ', p3=>' || api_trace.Literal(p3)

|| ', p4=>' || api_trace.Literal(p4)

|| ', p5=>' || api_trace.Literal(p5) || chr(10)

|| ', p6=>' || api_trace.Literal(p6)

|| ', p7=>' || api_trace.Literal(p7)

|| ', p8=>' || api_trace.Literal(p8)

|| ', p9=>' || api_trace.Literal(p9)

|| ', p_Primary_Language=>' || api_trace.Literal(p_Primary_Language) || ')' || ' returns '

|| api_trace.Literal(lv_result), p_level=>5);

return lv_result;

end lang;

procedure create_message(

p_application_id in number,

p_name in varchar2,

p_language in varchar2,

p_message_text in varchar2 )

is

begin

apex_190100.htmldb_lang.create_message(p_application_id,p_name,p_language,p_message_text);

apex_debug.log_long_message(p_message=>'API: ' || 'apex_lang.create_message('

|| 'p_Application_Id=>' || api_trace.Literal(p_Application_Id)

|| ', p_Name=>' || api_trace.Literal(p_Name)

|| ', p_Language=>' || api_trace.Literal(p_Language)

|| ', p_Message_Text=>' || api_trace.Literal(p_Message_Text) || ')', p_level=>5);

end create_message;

procedure update_message(

p_id in number,

p_message_text in varchar2 )

is

begin

apex_190100.htmldb_lang.update_message(p_id,p_message_text);

apex_debug.log_long_message(p_message=>'API: ' || 'apex_lang.update_message('

|| 'p_Id=>' || api_trace.Literal(p_Id)

|| ', p_Message_Text=>' || api_trace.Literal(p_Message_Text) || ')', p_level=>5);

end update_message;

procedure delete_message(

p_id in number )

is

begin

apex_190100.htmldb_lang.delete_message(p_id);

apex_debug.log_long_message(p_message=>'API: ' || 'apex_lang.delete_message('

|| 'p_Id=>' || api_trace.Literal(p_Id) || ')', p_level=>5);

end delete_message;

procedure update_translated_string(

p_id in number,

p_language in varchar2,

p_string in varchar2)

is

begin

apex_190100.htmldb_lang.update_translated_string(p_id,p_language,p_string);

apex_debug.log_long_message(p_message=>'API: ' || 'apex_lang.update_translated_string('

|| 'p_Id=>' || api_trace.Literal(p_Id)

|| ', p_Language=>' || api_trace.Literal(p_Language)

|| ', p_String=>' || api_trace.Literal(p_String) || ')', p_level=>5);

end update_translated_string;

procedure seed_translations(

p_application_id in number,

p_language in varchar2 )

is

begin

apex_190100.htmldb_lang.seed_translations(p_application_id,p_language);

apex_debug.log_long_message(p_message=>'API: ' || 'apex_lang.seed_translations('

|| 'p_Application_Id=>' || api_trace.Literal(p_Application_Id)

|| ', p_Language=>' || api_trace.Literal(p_Language) || ')', p_level=>5);

end seed_translations;

procedure create_language_mapping(

p_application_id in number,

p_language in varchar2,

p_translation_application_id in number)

is

begin

apex_190100.htmldb_lang.create_language_mapping(p_application_id,p_language,p_translation_application_id);

apex_debug.log_long_message(p_message=>'API: ' || 'apex_lang.create_language_mapping('

|| 'p_Application_Id=>' || api_trace.Literal(p_Application_Id)

|| ', p_Language=>' || api_trace.Literal(p_Language)

|| ', p_Translation_Application_Id=>' || api_trace.Literal(p_Translation_Application_Id) || ')', p_level=>5);

end create_language_mapping;

procedure update_language_mapping(

p_application_id in number,

p_language in varchar2,

p_new_trans_application_id in number)

is

begin

apex_190100.htmldb_lang.update_language_mapping(p_application_id,p_language,p_new_trans_application_id);

apex_debug.log_long_message(p_message=>'API: ' || 'apex_lang.update_language_mapping('

|| 'p_Application_Id=>' || api_trace.Literal(p_Application_Id)

|| ', p_Language=>' || api_trace.Literal(p_Language)

|| ', p_New_Trans_Application_Id=>' || api_trace.Literal(p_New_Trans_Application_Id) || ')', p_level=>5);

end update_language_mapping;

procedure delete_language_mapping(

p_application_id in number,

p_language in varchar2)

is

begin

apex_190100.htmldb_lang.delete_language_mapping(p_application_id,p_language);

apex_debug.log_long_message(p_message=>'API: ' || 'apex_lang.delete_language_mapping('

|| 'p_Application_Id=>' || api_trace.Literal(p_Application_Id)

|| ', p_Language=>' || api_trace.Literal(p_Language) || ')', p_level=>5);

end delete_language_mapping;

procedure publish_application(

p_application_id in number,

p_language in varchar2,

p_new_trans_application_id in number default null )

is

begin

apex_190100.htmldb_lang.publish_application(p_application_id,p_language,p_new_trans_application_id);

apex_debug.log_long_message(p_message=>'API: ' || 'apex_lang.publish_application('

|| 'p_Application_Id=>' || api_trace.Literal(p_Application_Id)

|| ', p_Language=>' || api_trace.Literal(p_Language)

|| ', p_New_Trans_Application_Id=>' || api_trace.Literal(p_New_Trans_Application_Id) || ')', p_level=>5);

end publish_application;

procedure emit_language_selector_list

is

begin

apex_190100.htmldb_lang.emit_language_selector_list;

apex_debug.log_long_message(p_message=>'API: ' || 'apex_lang.emit_language_selector_list', p_level=>5);

end emit_language_selector_list;

END APEX_LANG;

/

For some APEX APIs you have to grant access to the following objects:

GRANT EXECUTE ON APEX_<version>.WWV_FLOW_SECURITY TO <schema_name>;

GRANT EXECUTE ON APEX_<version>.WWV_FLOW_THEMES TO <schema_name>;

GRANT EXECUTE ON APEX_<version>.WWV_FLOW_THEME_STYLES TO <schema_name>;

Start an APEX application that you want to trace and execute a page with debugging LEVEL5 enabled. The debug log will now contain entries that list the invocations with input and output parameters for the prepared packages.

Example APEX Debug Log with API Calls:

Trace Code for LOCAL packages

Here you can list invocations to the package api_trace for your own local packages.

The switches 'Compact' and 'Logging of Procedure Start' control the code generation for your need.

Copy the listed code into your package to enable tracing of your procedures and functions.

The generated code uses the api_trace. Literal function to convert the passed values to quoted literals.

The function api_trace.Literal is overloaded to support many data types. Datatypes that can not be converted like records are logged with a <datatype x> placeholder. The logged values are truncated to a maximum length.

The compact form of the generated code is executed directly with the simple passing of the function arguments list.

The number of arguments will be checked at runtime to match the count of arguments of the current calling function.

Publish Application Objects in other Schema

Here you can produce a mirror copy of a schema.

The target schema name should be a new empty schema, that was created in the APEX Admin / Workspace to Schema Assignment page.

In the copy tables and views are present as views that access the original tables and views.

Other objects like packages, functions, procedures, and types are represented as synonyms.

An application that can run in the original schema will be able to run in the copied schema too.

The application can be downloaded in the following Github repository:

https://github.com/dstrack/APEX_API_Tracer

A demo of the app can be found here:

https://yboieokpjit8ejk-strackdev02.adb.eu-frankfurt-1.oraclecloudapps.com/ords/f?p=104:9999

Name: demo Password: Simple/8021